A Bit More About Kubernetes (K8s)

Purpose of Kubernetes (K8s)

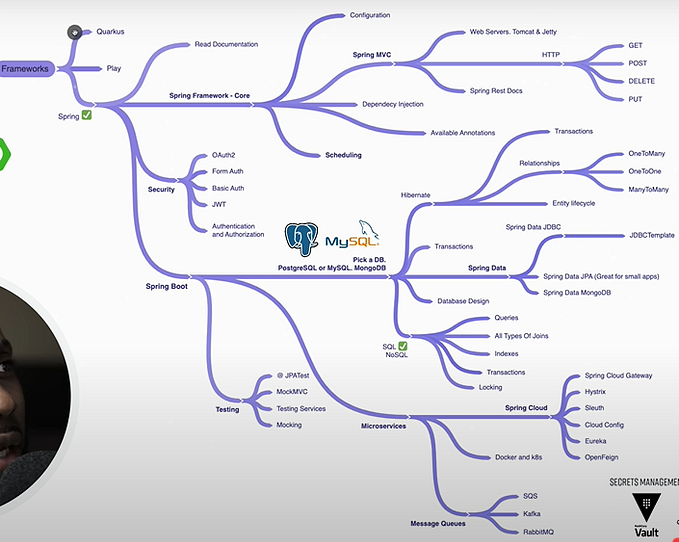

Deciding whether to use Kubernetes (K8s) boils down to understanding your application’s needs and matching them against what Kubernetes offers. If your application is complex, with multiple services (microservices) or components that need to scale dynamically with traffic, Kubernetes could be a good fit. It is particularly beneficial for applications that require high availability, fault tolerance, and efficient resource utilization. Kubernetes also gives you flexibility in deployment, letting you deploy and manage applications across different environments. However, it is essential to weigh your team’s skills and resources in Kubernetes and container technologies before making the decision. With these factors in mind, you can determine whether Kubernetes is the right choice for your application’s architecture.

- Container Orchestration: Kubernetes automates the deployment, scaling, and management of containerized applications, making it easier to manage complex application architectures composed of multiple containers.

- Scalability: Kubernetes allows applications to scale seamlessly by automatically adjusting the number of containers based on demand, ensuring optimal performance and resource utilization.

- High Availability: Kubernetes ensures high availability by automatically restarting containers that fail, distributing traffic among healthy containers, and providing self-healing capabilities.

- Resource Efficiency: Kubernetes optimizes resource utilization by scheduling containers based on available resources and constraints, allowing for efficient use of compute resources.

- Portability: Kubernetes provides a consistent platform for deploying and managing applications across different environments, including on-premises data centers, public clouds, and hybrid cloud environments.

- Service Discovery and Load Balancing: Kubernetes includes built-in features for service discovery and load balancing, allowing applications to easily communicate with each other and distribute incoming traffic across multiple instances of an application.

- Rolling Updates and Rollbacks: Kubernetes supports rolling updates and rollbacks, enabling seamless updates to applications without downtime and the ability to revert to previous versions if needed.
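To make the rolling update idea above more concrete, here is a minimal Python sketch that simulates how old pods can be swapped for new ones in small steps. The parameter names mirror the `maxSurge` and `maxUnavailable` settings of a Deployment's rolling update strategy, but the loop itself is a simplified illustration (it assumes new pods become ready immediately) and not the actual controller logic.

```python
def rolling_update(replicas: int, max_surge: int = 1, max_unavailable: int = 0) -> list:
    """Return the (old_pods, new_pods) counts after each step of a simulated rollout."""
    if max_surge == 0 and max_unavailable == 0:
        # With both budgets at zero no pod could ever be added or removed.
        raise ValueError("max_surge and max_unavailable cannot both be 0")
    old, new = replicas, 0
    steps = []
    while old > 0 or new < replicas:
        # Start new pods, never exceeding replicas + max_surge pods in total.
        room = replicas + max_surge - (old + new)
        new += max(0, min(replicas - new, room))
        # Stop old pods, never dropping below replicas - max_unavailable pods.
        # Simplification: new pods are assumed to be ready immediately.
        spare = (old + new) - (replicas - max_unavailable)
        old -= max(0, min(old, spare))
        steps.append((old, new))
    return steps


for old, new in rolling_update(replicas=3, max_surge=1, max_unavailable=0):
    print(f"old={old} new={new}")
```

At every step the total number of pods stays between `replicas - max_unavailable` and `replicas + max_surge`, which is why the application can keep serving traffic while the update proceeds.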

Technical Explanation of the Kubernetes (K8s) Workflow

In Kubernetes (K8s), the flow revolves around managing containerized applications efficiently within a cluster environment. It begins with a user defining the desired state of their application using Kubernetes resources like Deployments. These resources are submitted to the Kubernetes API server, which validates and stores them. Controllers, including the Deployment controller, watch the API server and continuously work to bring the actual state of the cluster in line with the desired state.
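The desired-state idea can be sketched as a small reconciliation loop in Python. This is only an illustration of the pattern: `ClusterState`, `reconcile`, and the `web-N` pod names are hypothetical and do not correspond to any real Kubernetes API.

```python
from dataclasses import dataclass, field


@dataclass
class ClusterState:
    """Hypothetical stand-in for the pods that currently exist for one app."""
    running_pods: list = field(default_factory=list)
    next_id: int = 0


def reconcile(desired_replicas: int, state: ClusterState) -> list:
    """Compare desired and observed state, then act to close the gap."""
    actions = []
    # Too few pods: create replacements until the desired count is reached.
    while len(state.running_pods) < desired_replicas:
        name = f"web-{state.next_id}"
        state.next_id += 1
        state.running_pods.append(name)
        actions.append(f"create {name}")
    # Too many pods: delete the surplus.
    while len(state.running_pods) > desired_replicas:
        name = state.running_pods.pop()
        actions.append(f"delete {name}")
    return actions


state = ClusterState()
print(reconcile(3, state))            # initial rollout: three pods are created
state.running_pods.remove("web-1")    # simulate a pod failure
print(reconcile(3, state))            # the missing pod is replaced
print(reconcile(3, state))            # nothing left to do
```

The same loop handles the initial rollout, recovery from failures, and scaling down, which is the main appeal of declaring a desired state instead of issuing one-off commands.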

Once the controllers have created the pods, the Kubernetes scheduler kicks in. Its responsibility is to assign pods (the smallest deployable units in Kubernetes) to nodes based on available resources, constraints, and policies. Each node in the cluster runs a kubelet, which is responsible for managing the lifecycle of pods on that node.
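As a rough illustration of how a scheduler can pick a node, the Python sketch below first filters out nodes that lack resources and then scores the remaining ones. The `Node` and `Pod` classes and the "most free CPU wins" scoring rule are assumptions made for this example; the real scheduler applies many more filters and scoring plugins.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Node:
    name: str
    free_cpu_millis: int
    free_mem_mib: int


@dataclass
class Pod:
    name: str
    cpu_millis: int
    mem_mib: int


def schedule(pod: Pod, nodes: list) -> Optional[str]:
    """Pick a node for the pod, or return None if the pod cannot be placed."""
    # Filter: keep only nodes with enough free CPU and memory.
    feasible = [
        n for n in nodes
        if n.free_cpu_millis >= pod.cpu_millis and n.free_mem_mib >= pod.mem_mib
    ]
    if not feasible:
        return None  # in a real cluster the pod would stay Pending
    # Score: prefer the node with the most resources left after placement
    # (an illustrative policy chosen for this sketch).
    best = max(
        feasible,
        key=lambda n: (n.free_cpu_millis - pod.cpu_millis, n.free_mem_mib - pod.mem_mib),
    )
    # Bind: reserve the resources on the chosen node.
    best.free_cpu_millis -= pod.cpu_millis
    best.free_mem_mib -= pod.mem_mib
    return best.name


nodes = [Node("node-a", 500, 1024), Node("node-b", 2000, 4096)]
print(schedule(Pod("api", 1000, 512), nodes))
print(schedule(Pod("huge", 4000, 512), nodes))
```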

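Underneath, the container runtime, such as containerd or CRI-O, handles the actual execution of containers within pods. (Docker Engine can still be used through the cri-dockerd adapter, since built-in Docker support was removed in Kubernetes 1.24.) The runtime pulls container images from registries, creates container instances, and manages their lifecycle.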

Kubernetes networking ensures that pods can communicate with each other and external clients. It assigns each pod an IP address and provides networking plugins for routing traffic between pods, load balancing, and handling ingress traffic.

Services abstract away the complexity of networking and load balancing by providing a stable endpoint for accessing pods. ConfigMaps and Secrets manage configuration data and sensitive information, respectively.
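The "stable endpoint" idea behind a Service can be sketched in a few lines of Python. The `Service` class below is a hypothetical model, and round-robin is used only because it is easy to follow; the actual selection of a backend pod depends on how kube-proxy is configured.

```python
class Service:
    """Hypothetical model of a Service: one stable name in front of changing pod IPs."""

    def __init__(self, name: str):
        self.name = name
        self._endpoints = []
        self._counter = 0

    def set_endpoints(self, pod_ips):
        # Called whenever pods are added, removed, or replaced.
        self._endpoints = list(pod_ips)

    def route(self) -> str:
        """Return the pod IP that should receive the next request."""
        if not self._endpoints:
            raise RuntimeError(f"service {self.name} has no ready endpoints")
        ip = self._endpoints[self._counter % len(self._endpoints)]
        self._counter += 1
        return ip


svc = Service("web")
svc.set_endpoints(["10.0.0.1", "10.0.0.2"])
print([svc.route() for _ in range(3)])
svc.set_endpoints(["10.0.0.9"])   # pods were replaced; clients still call "web"
print(svc.route())
```

Clients only ever refer to the Service name, so pods can come and go without any client-side changes.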

Key Technical Points for Understanding the Kubernetes (K8s) Flow

- Pods: Pods are the smallest deployable units in Kubernetes, representing one or more containers that share the same network and storage resources.

- Containers: Containers encapsulate applications and their dependencies, providing a lightweight and portable runtime environment.

- Images: Containers are instantiated from images, which contain all the necessary components to run the application, including code, dependencies, and configuration.

- Container Registries: Container images are stored in registries like Docker Hub, where they can be accessed and pulled by Kubernetes.

- Deployment: Pods are deployed and managed within Kubernetes clusters, allowing for dynamic scaling and resource allocation based on application requirements.

- Pod Composition: Pods can consist of multiple containers, each serving a specific role within the application, such as the main application container and sidecar containers for logging or monitoring.

- Abstraction: Pods provide an abstraction layer that simplifies container management, allowing developers to define and manage groups of related containers as a single unit.

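- Isolation: Containers within a pod run with their own filesystems and processes and are isolated from the underlying host system, even though they share the pod’s network and any shared volumes. This helps ensure consistency and security across different environments.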

- Dynamic Lifecycle: Pods are ephemeral and can be created, destroyed, and replaced dynamically, enabling seamless scaling and rolling updates without downtime.

- Deployment: A user creates a Deployment object, which specifies the desired state for a set of pods, and submits it to the API server.

- API Server: The API server validates and stores the object. The Deployment controller, which watches the API server, then creates a ReplicaSet, which in turn creates the desired number of pod replicas.

- Scheduler: The Kubernetes scheduler assigns the newly created pods to available nodes in the cluster based on resource requirements, affinity/anti-affinity rules, and other constraints.

- Kubelet: Each node in the cluster runs a kubelet, which is responsible for managing pods. The kubelet communicates with the API server to receive instructions about which pods to run and manages the pod lifecycle on the node.

- Container Runtime: The container runtime (e.g., containerd or CRI-O; Docker Engine requires the cri-dockerd adapter since Kubernetes 1.24) is responsible for running and managing containers within pods. It pulls container images from registries, creates container instances, and manages container lifecycle operations.

- Networking: Kubernetes networking ensures that pods can communicate with each other within the cluster and with external clients. Each pod gets its own IP address, and Kubernetes provides networking plugins and solutions for routing traffic between pods, load balancing, and ingress routing.

- Ingress: An Ingress is an API object that manages external access to services within the cluster. It provides a way to route external HTTP and HTTPS traffic to different services based on hostnames and paths. Essentially, an Ingress acts as a layer of abstraction for HTTP(S) routing, allowing you to define rules for directing incoming requests to specific services.

- kube-proxy: kube-proxy is a daemon that runs on each node. It watches for Service and Endpoint changes and manages iptables rules on the host to implement Service load balancing (iptables is one of several available implementations).

- Service: Kubernetes services provide a way to expose pods internally or externally. A service abstracts away the complexity of managing networking and load balancing for pods. It provides a stable endpoint for accessing pods, enabling seamless communication between different parts of an application.

- ConfigMap and Secrets: Kubernetes manages configuration data and sensitive information using ConfigMaps and Secrets. ConfigMaps store configuration data as key-value pairs, while Secrets store sensitive data such as passwords and API tokens. Secret values are base64-encoded rather than encrypted by default, so encryption at rest and access controls (RBAC) need to be configured to protect them.
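For the Secrets point above, the following Python sketch builds a Secret manifest as a plain dictionary and shows that base64 is an encoding, not encryption. The helper `make_secret_manifest`, the Secret name `db-credentials`, and the sample password are made up for this example.

```python
import base64
import json


def make_secret_manifest(name: str, values: dict) -> dict:
    """Build a Secret manifest; values in the `data` field must be base64-encoded."""
    encoded = {
        key: base64.b64encode(value.encode("utf-8")).decode("ascii")
        for key, value in values.items()
    }
    return {
        "apiVersion": "v1",
        "kind": "Secret",
        "metadata": {"name": name},
        "type": "Opaque",
        "data": encoded,
    }


manifest = make_secret_manifest("db-credentials", {"password": "s3cr3t"})
print(json.dumps(manifest, indent=2))

# Anyone who can read the manifest can reverse the encoding without a key.
recovered = base64.b64decode(manifest["data"]["password"]).decode("utf-8")
print(recovered)
```

Because decoding needs no key, anyone with read access to the Secret object can recover the value, so access to Secrets should be restricted.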

To implement Kubernetes (k8s) on AWS, you’ll typically follow these steps:

1. AWS Account Setup:

Ensure you have an active AWS account with the necessary permissions to create and manage resources.

2. Choose a Kubernetes Distribution:

Decide whether you want to use a managed Kubernetes service like Amazon EKS (Elastic Kubernetes Service) or set up your own Kubernetes cluster on EC2 instances.

3. Amazon EKS (Managed Service):

- Create an Amazon EKS cluster: Use the AWS Management Console, AWS CLI, or AWS SDK to create an Amazon EKS cluster.

- Configure kubectl: Install and configure the Kubernetes command-line tool (kubectl) to communicate with your EKS cluster.

- Launch worker nodes: Create an Amazon EC2 Auto Scaling group with worker nodes using the provided Amazon EKS-optimized Amazon Machine Image (AMI).

- Set up networking: Configure the Amazon VPC, subnets, security groups, and other networking components required for your EKS cluster.

- Integrate with AWS services: Leverage AWS services like IAM for authentication and authorization, Amazon ECR for container registry, and AWS CloudFormation for infrastructure as code.

4. Self-Managed Kubernetes Cluster:

- Choose a Kubernetes deployment tool: Decide on a Kubernetes deployment tool such as kops or kubeadm. (eksctl, by contrast, is aimed at Amazon EKS clusters rather than self-managed ones.)

- Provision EC2 instances: Launch EC2 instances to serve as your Kubernetes control plane (master) and worker nodes. Configure security groups, IAM roles, and other necessary settings.

- Install Kubernetes: Use the chosen deployment tool to install Kubernetes on the provisioned EC2 instances.

- Configure networking: Set up networking components like VPC, subnets, route tables, and internet gateways to enable communication between Kubernetes nodes.

- Configure storage: Choose and configure storage solutions for persistent volumes and storage classes in your Kubernetes cluster.

5. Cluster Management and Optimization:

- Configure node auto-scaling: Set up Amazon EC2 Auto Scaling groups to automatically scale the number of worker nodes based on demand.

- Implement monitoring and logging: Use Amazon CloudWatch, Amazon CloudWatch Logs, and other monitoring tools to track cluster performance and application health.

- Enable security features: Implement security best practices, including IAM roles, security groups, network policies, and encryption, to secure your Kubernetes cluster.

- Implement backup and recovery: Set up backup solutions for critical data and implement disaster recovery strategies to ensure business continuity.

6. Application Deployment:

- Package applications: Containerize your applications using Docker or other containerization tools.

- Deploy applications: Use Kubernetes resources like Deployments, Services, Ingresses, and ConfigMaps to deploy and manage your applications on the Kubernetes cluster.

- Implement CI/CD pipelines: Set up continuous integration and continuous deployment pipelines to automate the deployment process and ensure smooth application delivery.
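As a sketch of the "Deploy applications" step, the Python snippet below generates a minimal Deployment manifest. The function `deployment_manifest`, the app name `web`, and the image `example.com/web:1.0` are placeholders invented for this example; the field names follow the `apps/v1` Deployment schema.

```python
import json


def deployment_manifest(name: str, image: str, replicas: int, port: int) -> dict:
    """Build a minimal apps/v1 Deployment manifest as a dictionary."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            "replicas": replicas,
            # The selector must match the labels on the pod template.
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [
                        {
                            "name": name,
                            "image": image,  # placeholder image reference
                            "ports": [{"containerPort": port}],
                        }
                    ]
                },
            },
        },
    }


manifest = deployment_manifest("web", "example.com/web:1.0", replicas=3, port=8080)
print(json.dumps(manifest, indent=2))
```

The printed JSON could be saved to a file such as `deployment.json` and applied with `kubectl apply -f deployment.json`, since kubectl accepts JSON as well as YAML. Generating manifests from code like this is one way a CI/CD pipeline can fill in the image tag for each release.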

7. Testing and Validation:

- Test cluster functionality: Perform comprehensive testing to validate the correctness, scalability, and reliability of your Kubernetes cluster.

- Conduct security audits: Review security configurations and conduct penetration testing to identify and remediate vulnerabilities.

- Implement best practices: Follow Kubernetes best practices and AWS recommendations to optimize performance, cost, and security.

Technical Use Cases for Kubernetes (K8s)

- Container Orchestration: Kubernetes excels at managing containerized applications, automating deployment, scaling, and operations tasks, such as container scheduling, health monitoring, and self-healing.

- Microservices Architecture: Kubernetes provides a robust foundation for building and deploying microservices-based applications, allowing each component to run in its own container and providing features like service discovery, load balancing, and fault tolerance.

- Scalable Web Applications: Kubernetes enables horizontal scaling of applications to handle varying levels of traffic and workload, automatically adding or removing container instances based on demand.

- Continuous Integration/Continuous Deployment (CI/CD): Kubernetes integrates seamlessly with CI/CD pipelines, enabling automated testing, building, and deployment of containerized applications, with features like rolling updates and rollbacks for zero-downtime deployments.

- Hybrid and Multi-Cloud Deployments: Kubernetes supports deployment across multiple environments, including on-premises data centers, public clouds (e.g., AWS, Azure, Google Cloud), and hybrid cloud environments, providing consistency and portability.

- Edge Computing: Kubernetes can be used to deploy and manage containerized applications at the edge, closer to end-users or IoT devices, enabling low-latency processing and improved performance.

- Stateful Workloads: While Kubernetes is commonly associated with stateless applications, it also supports stateful workloads, such as databases, caching systems, and distributed storage solutions, using features like StatefulSets and PersistentVolumes.

- Big Data and Analytics: Kubernetes can be used to deploy and manage big data processing and analytics frameworks, such as Apache Spark, Apache Hadoop, and Elasticsearch, providing scalability and resource isolation.

- Machine Learning and AI: Kubernetes provides a platform for deploying and scaling machine learning models and AI applications, with features like GPU support, distributed training, and model versioning.

- Development and Testing Environments: Kubernetes can be used to create ephemeral development and testing environments, enabling developers to quickly spin up isolated environments for building, testing, and debugging applications.
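The horizontal scaling mentioned under Scalable Web Applications above is typically handled by the Horizontal Pod Autoscaler, whose documented rule is `desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue)`. The Python sketch below applies that rule; the function name and the default minimum and maximum are assumptions for this example, while the 10% tolerance reflects the documented default.

```python
import math


def desired_replicas(
    current_replicas: int,
    current_metric: float,
    target_metric: float,
    min_replicas: int = 1,
    max_replicas: int = 10,
    tolerance: float = 0.1,
) -> int:
    """Compute a replica count in the style of the Horizontal Pod Autoscaler."""
    ratio = current_metric / target_metric
    # Avoid flapping: do nothing when the metric is close enough to the target.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    wanted = math.ceil(current_replicas * ratio)
    # Clamp the result to the configured bounds.
    return max(min_replicas, min(max_replicas, wanted))


# Example: 4 pods averaging 90% CPU against a 60% target.
print(desired_replicas(4, current_metric=90, target_metric=60))
```

When the load drops, the same formula yields a smaller number, so pods are removed again and resources are freed.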

Real-World Examples of Kubernetes (K8s) Usage

- E-Commerce Platform: A company running an e-commerce platform utilizes Kubernetes to handle varying levels of traffic during peak shopping seasons, ensuring high availability and scalability for their online storefront.

- Finance and Banking: A financial institution employs Kubernetes to deploy and manage microservices-based applications for online banking, payment processing, and fraud detection, ensuring security, compliance, and scalability.

- Media Streaming Service: A media streaming service leverages Kubernetes to deploy and scale its backend infrastructure for streaming video content to millions of users worldwide, with features like content delivery networks (CDNs) and real-time transcoding.

- Gaming Industry: A game development company uses Kubernetes to manage the deployment and scaling of multiplayer online games, handling thousands of concurrent players and ensuring low-latency gameplay across different regions.

- Healthcare and Telemedicine: A healthcare provider adopts Kubernetes to deploy and manage cloud-native applications for electronic health records (EHR), telemedicine platforms, and medical imaging analysis, ensuring data security, compliance, and scalability.

- Travel and Hospitality: A travel booking platform relies on Kubernetes to deploy and scale its reservation system, handling a surge in bookings during peak travel seasons and integrating with third-party services for hotel, flight, and car rental bookings.

- IoT and Edge Computing: A smart city initiative uses Kubernetes to deploy and manage edge computing infrastructure for collecting and analyzing sensor data from IoT devices deployed throughout the city, enabling real-time monitoring and decision-making.

- Software Development and DevOps: A software development company adopts Kubernetes for its development and testing environments, enabling developers to spin up isolated environments for building, testing, and deploying applications using CI/CD pipelines.

- Education and E-Learning: An online education platform utilizes Kubernetes to deploy and scale its learning management system (LMS) and video conferencing tools, facilitating remote learning for students and educators worldwide.

- Manufacturing and Industry 4.0: A manufacturing company employs Kubernetes to deploy edge computing infrastructure for predictive maintenance, quality control, and supply chain optimization, leveraging real-time data analytics and machine learning algorithms.

Common Disadvantages of Kubernetes (K8s)

- Complexity: Kubernetes is quite complicated to set up and manage due to its many moving parts and intricate architecture.

- Resource Intensive: Running Kubernetes requires a lot of computing power and memory, which can be costly.

- Operational Overhead: It adds extra work for your operations team to keep Kubernetes clusters running smoothly, including tasks like monitoring and upgrading.

- Networking Complexity: Configuring networking in Kubernetes can be tricky, especially across different cloud providers or environments.

- Vendor Lock-In: You might become too reliant on one cloud provider if you use their managed Kubernetes service, making it hard to switch later.

- Steep Learning Curve: Learning how to use Kubernetes effectively takes time and effort, which might slow down your development process.

- High Availability Configuration: Making sure your Kubernetes clusters are always available requires careful setup, and mistakes can lead to downtime.

- Security Concerns: Kubernetes needs to be configured correctly to be secure, and any mistakes could leave your systems vulnerable to attacks.

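- Limited Windows Support: While Kubernetes is great for Linux, Windows is not as well supported. Windows worker nodes can run Windows containers, but the control plane runs only on Linux and some features are not available for Windows workloads.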

- Community Fragmentation: With so many different tools and add-ons available, it can be hard to know which ones are best for your needs, leading to confusion.

Thanks for reading. Stay connected with me.